Expectation vs. Reality. AI In Smart Management: What’s Next?

We are delighted to welcome Kuei to PowerTalk today. Kuei serves as the AI Director at PowerArena, where his primary focus lies in the fields of computer vision and image processing. With an extensive academic background, Kuei has deep expertise in using limited image information to reconstruct objects and spaces, overcoming challenges such as poor lighting or shadows. This enables him to achieve highly accurate object recognition and object tracking, among other applications. Kuei’s expertise in computer vision extends to various industries, including electronics manufacturing and AI-based in-vehicle software. Given his knowledge and experience, Kuei is an ideal guest for this episode.

The topic we have eagerly wanted to discuss is artificial intelligence, a concept of great breadth and significance. With the recent surge in OpenAI’s advancements, people have developed diverse expectations and interpretations of AI. Therefore, I would like to invite Kuei to shed light on the fundamental concept of AI and offer insights on how to approach its understanding. Additionally, we would appreciate Kuei’s expertise in elaborating on computer vision and deep learning, which are frequently associated with AI.

Kuei: AI has a rich history in the field of computer science, spanning over 50 years if we adhere to a strict definition. The earliest development can be traced back approximately 70 years. However, despite its longevity, AI is still perceived as falling short of the public’s expectations. Many customers find that AI systems often have limitations and do not function as anticipated. This suggests a gap between the current state of AI development and the general understanding of the technology.

The initial concept of AI aimed for complete intelligence: an entity capable of perception, judgment, and decision-making. Achieving this level of intelligence has proven to be challenging. The Turing test, a well-known assessment, evaluates whether a machine can interact with humans convincingly enough to pass for a person. Deep learning, with its foundations in neural networks, has made significant progress in recent years. Neural networks are loosely modeled on how human nerve cells operate and make decisions. This concept has been explored for several decades, and three pioneers, often referred to as the “fathers of AI,” began working on these ideas in the early years.

Hardware limitations have historically posed challenges for training neural networks, with the process often taking over a month. Verifying experimental designs and rectifying mistakes required substantial time investment. Consequently, the practical application of neural networks remained restricted to the academic realm due to these hardware constraints. Recent breakthroughs in graphics card technology have revolutionized AI. Training times that once spanned over a month can now be completed within a week, resulting in remarkable advancements and research progress. This breakthrough offers genuine opportunities for applying AI in practical environments. Previously, the protracted training process combined with the hardware limitations hindered the timely decision-making abilities of AI systems, rendering them impractical in real-world scenarios. The interplay between software and hardware in computer science is often evident—new hardware advancements facilitate software development, but as software progresses, it eventually encounters limitations imposed by the hardware. Once further hardware breakthroughs occur, software can advance once again. Many concepts, like AI, have existed for years, but hardware constraints prevented their full realization and advancement. This summarizes the developmental journey of artificial intelligence.

When discussing AI, it is important to consider its current capabilities. While the notion of AI includes the entire decision-making process, what AI can presently achieve and excel at is the perception aspect. Although progress has been made in areas such as generative AI, true creativity is still elusive. AI can accumulate vast amounts of past information and generate similar outputs based on past experiences. Nonetheless, this represents a significant technological breakthrough compared to the past. The recent breakthroughs in graphics card hardware have played a pivotal role in advancing AI, albeit with increased hardware requirements. The complexity of tasks AI can perform correlates with the demand for hardware resources. This serves as the present reality of AI—a higher degree of complexity necessitates greater hardware capabilities. In various fields, AI replaces human definitions and judgments that were previously relied upon for computer-related tasks and decision-making. Traditional machine learning involved defining specific attributes, like shape and color, based on available camera inputs. However, deep learning has brought about a change, enabling computers to find abstractions through neural networks. The development of explainable AI in recent years has enabled some understanding of the abstract concepts learned by computers, albeit still at a relatively early stage. In the past few years, we have witnessed the development of AI that can handle abstract aspects that are difficult for humans to define precisely. Our environment undergoes constant change, which is challenging to comprehensively define. For instance, the reflection of an object’s color

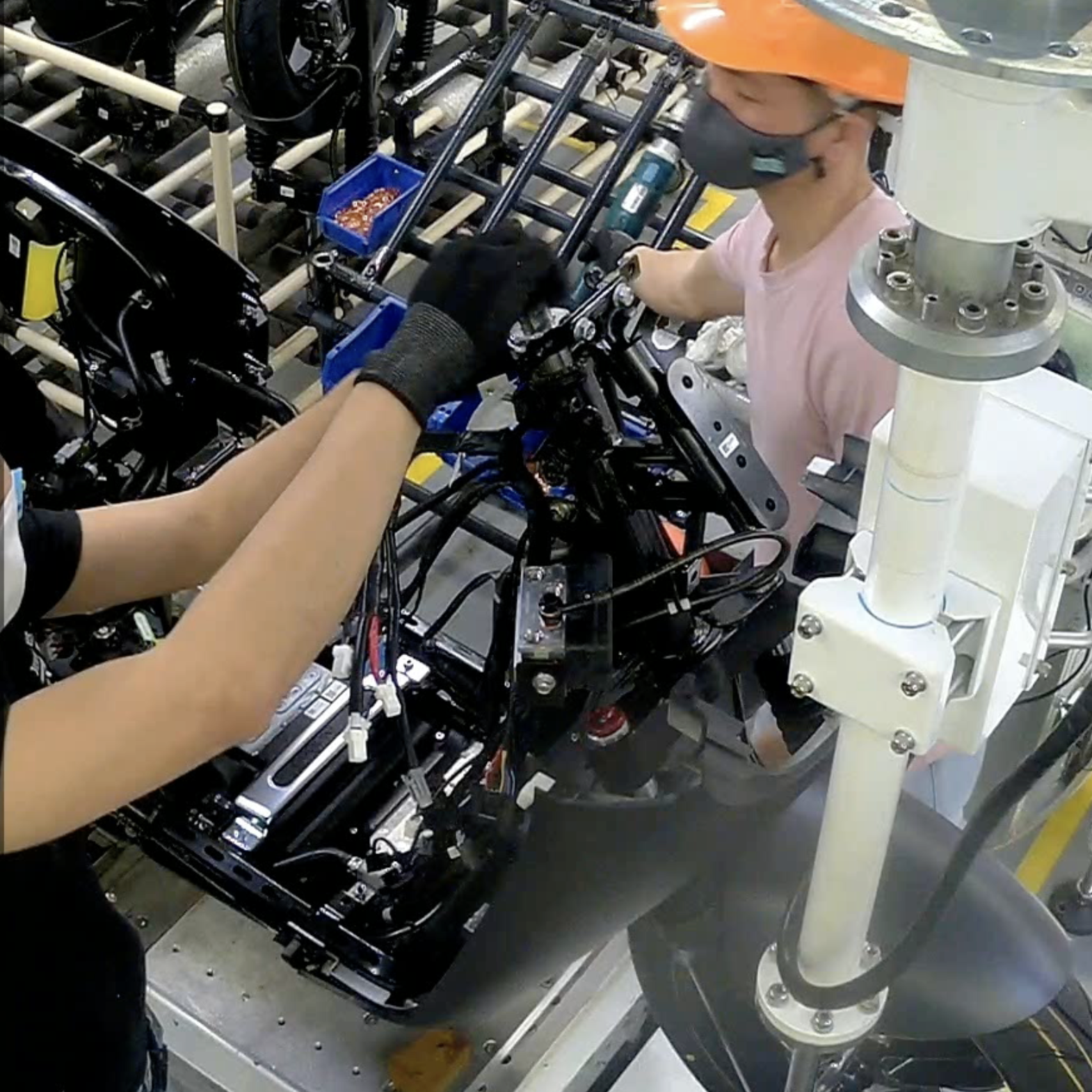

The perception aspect of AI holds significant importance in its current development. However, this perception is inherently limited as it lacks the human senses of touch, taste, and smell, which contribute to our holistic understanding of objects. Refocusing on AI vision for perception, let’s explore its deployment in the manufacturing field and factory production lines. As previously mentioned, the manufacturing environment entails numerous abstract variables. So, what are some of these variables in a factory setting?

Kuei: I recall that the concept of Industry 4.0 revolves around digitizing factories, where every aspect within the facility is captured by cameras. While this is an ideal scenario, implementing cameras can provide a comprehensive view not only of production status but also of safety and transportation processes. This enables better control over issues such as production line disruptions due to material shortages or delays in delivery routes, ultimately improving overall efficiency. Complete digitalization allows for the identification and control of such inefficiencies, enhancing productivity. However, there are challenges to consider.

One of the challenges is the limitation of fixed cameras. These cameras make it difficult to zoom in and out, preventing a detailed view of the entire production situation or specific areas. To address this, numerous large and small cameras may be required, similar to the approach taken in Amazon’s unmanned stores. Factories, however, often have even more areas that need monitoring, leading to questions about the number of cameras that should be deployed and whether the factory’s infrastructure can support such a setup. Network capabilities, storage capacity, and the availability of servers and graphics cards for AI usage all incur costs. Factories operate within limited budgets, and the expenses and time required to set up such infrastructure pose limitations.

Given these challenges, establishing priorities becomes essential. In my opinion, the readiness of the infrastructure is crucial. Many factories have reported issues with WiFi connectivity, so ensuring a stable network is essential. Additionally, having sufficient storage capacity to store past data is vital. In many cases, identifying problems through AI may not be necessary, as the production history, where everything is recorded, can provide valuable insights. Analyzing the recordings can uncover discrepancies between reality and expectations, as human decision-making can sometimes deviate from the intended process. This system allows factories to identify reasons for stagnant production efficiency and yield rates.

Using AI in this context incurs costs, but it can streamline routine tasks. By leveraging AI learning, factories can focus on critical points and delegate routine monitoring to AI systems, essentially acting as a robot overseeing workers 24/7. For instance, if 80% of workers consistently skip step 2, AI can be trained to detect and monitor compliance with that specific step. Annotations and records in the production history allow for easy verification of whether a particular step was completed.
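The step-skipping example above can be sketched in a few lines of Python. This is only an illustration, assuming the production history is available as one list of observed step numbers per work cycle; the record format and the function name `skip_rates` are hypothetical, not part of any PowerArena product.

```python
from collections import Counter


def skip_rates(cycles, expected_steps):
    """Return, for each expected step, the fraction of cycles that skipped it.

    `cycles` is a list of work cycles; each cycle is the list of step
    numbers that were observed (hypothetical record format).
    """
    missing = Counter()
    for observed in cycles:
        seen = set(observed)
        for step in expected_steps:
            if step not in seen:
                missing[step] += 1
    total = len(cycles)
    return {
        step: (missing[step] / total if total else 0.0)
        for step in expected_steps
    }


# Five recorded cycles; step 2 is skipped in four of them.
cycles = [
    [1, 3, 4],
    [1, 2, 3, 4],
    [1, 3, 4],
    [1, 3, 4],
    [1, 3, 4],
]
rates = skip_rates(cycles, expected_steps=[1, 2, 3, 4])
for step, rate in rates.items():
    print(f"step {step}: skipped in {rate:.0%} of cycles")
```

A report like this points to the one step worth training a detector for, rather than monitoring every step of the SOP.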

In summary, while implementing comprehensive camera systems in factories may pose challenges, focusing on infrastructure readiness, ensuring stable connectivity, and sufficient storage capacity are crucial priorities. By leveraging AI, factories can address inefficiencies, gain insights from production history, and optimize routine processes, ultimately improving overall productivity and yield rates.

As you mentioned, achieving the ideal state where a factory has abundant budget and well-equipped hardware, along with a perfectly designed layout from the outset, would greatly benefit from AI implementation. However, it’s important to acknowledge that many production lines face limitations due to pre-existing engineering constraints. In the context of AI training, the concept of annotation plays a significant role. So, what exactly is annotation, and how does it relate to managing the human variable?

Kuei: Most AI algorithms primarily rely on supervised learning, which can be likened to a teacher instructing students. Annotation plays a vital role in this process, akin to creating a textbook to teach AI about various objects. For example, by annotating an apple, we provide AI with the knowledge that such objects are called apples. AI can initially recognize apples based on this annotation. Thus, annotation is of great importance.

The design of neural network methods involves specific reasons, closely tied to the annotation process. Regardless of the environment, be it day or night, sunny or rainy, apples remain apples. However, AI cannot learn about these variations unless it has encountered them before. We can think of AI as a 3-year-old child, continuously learning and evolving. The purpose of annotation is to assist AI in recognizing and understanding objects.

In practice, we often encounter situations where materials may initially appear white, but after a month of training, their color changes to orange. AI, not having seen this new color before, may struggle to recognize it. However, with advancements in AI technology, we can introduce changes to enable AI to learn and adapt to such variations, such as recognizing red apples and green apples as part of the apple category. While this may not be feasible currently, progress can be made in the future.

In general, this process of AI learning is known as MLOps, or machine learning operations. It can be compared to the growth of a very young rookie in a factory who, over the course of 50 years, becomes a seasoned factory manager capable of handling numerous changes. Similarly, AI undergoes a learning process, accumulating experience and improving its models. Through this process, AI becomes better equipped to handle new situations and changes. For instance, in the field of self-driving cars and universal object detection, AI has demonstrated remarkable learning capabilities, but it is essential to provide it with the necessary annotations to learn about unfamiliar objects or scenarios.

When a factory decides to implement AI vision, it is essential to recognize that the AI system, like a 3-year-old child, requires sufficient time to learn and acquire the knowledge of the production line to meet the desired goals.

Kuei: Indeed, cross-field cooperation can present challenges due to the distinct nature of AI in computer science and the industrial engineering and manufacturing domain. These fields have inherent gaps that need to be bridged for successful collaboration. The key objective of cross-field cooperation is to overcome these gaps and foster integration between the two areas. One major hurdle lies in the different languages spoken in each field. Finding common ground and shared understanding is crucial. How can we effectively communicate and integrate these different perspectives and knowledge domains? Only by working together can we harness the power of AI to create smart manufacturing solutions.

Communication stands out as the most difficult yet vital aspect of cross-field cooperation. It requires active engagement, open dialogue, and a willingness to understand and appreciate the unique insights and expertise each field brings to the table. By fostering effective communication, we can facilitate the exchange of ideas, knowledge, and requirements, ultimately enabling successful cross-field collaboration and the realization of AI-driven smart manufacturing.

As an AI director, what advice would you offer to a manager who is preparing to implement AI vision in their operations?

Kuei: The primary focus should be on identifying the core problem at hand. It is common for the general public to hold misconceptions about AI, and even our customers may have their own expectations of how AI can address their specific issues. However, it is essential to recognize that there might be misunderstandings about the capabilities and costs associated with AI. For instance, a solution that costs 20 million U.S. dollars may be unattainable if the budget is only 20 million Taiwan dollars. In such cases, it is crucial to maintain a realistic perspective.

As professionals in the field of AI, we possess the correct understanding of its capabilities and limitations. With this knowledge, we can provide our customers with various options for problem-solving. It is crucial to pinpoint the key problem and focus our efforts on addressing it. By doing so, we may be able to resolve 80% of their issues without incurring the entire cost. The goal is to achieve significant progress by investing only a fraction of the resources required for a comprehensive solution.

The primary focus should be on understanding the key challenges faced by managers on their production lines. It is crucial for managers to have a clear awareness of these challenges. However, it’s important to note that not all challenges necessarily require the implementation of AI vision. In fact, in some cases, we may even recommend alternative solutions to address certain issues. By strategically applying AI vision to critical areas where it can have the most impact, managers can achieve the most effective and progressive results.

Kuei: Based on our previous experiences, customers often express a desire to monitor SOP. However, it is crucial to question the true necessity of such comprehensive monitoring. Many production processes already incorporate fool-proof measures to mitigate risks. Instead, we should focus on identifying areas where fool-proof measures may be insufficient or situations where errors could result in significant losses. These are the aspects that warrant our attention the most. Monitoring excessively subtle steps or relatively inconsequential ones that do not pose significant risks may not be effective or efficient in terms of resource allocation.

Factories with labor-intensive processes and a high-mix low-volume production often face unique challenges. In light of this, what advice would you offer these factories considering that the training and maintenance of AI models may be closely tied to their current production line conditions?

Kuei: The extent of variability depends on how it is defined. It could encompass differences in appearance or minor factors such as varying voltages. Providing us with such changes during the early stages of training allows AI to recognize and differentiate between different instances. Computer vision can also assess whether AI can effectively learn and comprehend these variations. AI can expand its knowledge by continuously progressing through training. However, it is important to note that training AI takes time.

A recurring issue we encounter is that AI may perform well initially but run into difficulties in subsequent months. Eventually, budget constraints may hinder its maintenance, leading to its discontinuation. This is unfortunate, because we would prefer to see AI deployed and operating for the long term. Long-running operations inevitably encounter changes, with some factories even engaging in different activities during morning and afternoon shifts. Due to the low unit price of their products, it may not be cost-effective for them to utilize AI extensively for monitoring purposes. They may prioritize evaluating production and planning for the upcoming year. It is crucial for factories to clearly communicate their requirements, and which aspects they want AI to assist with, during the planning stage. Additionally, the level of variation within the factory should be assessed thoroughly. By considering these factors, a more complete evaluation can be conducted, allowing for the long-term utilization of AI on the production line.

In order to facilitate the seamless deployment of AI, managers should provide comprehensive information to data scientists at the onset of the implementation process. This will enable them to maximize the value and quantity of data available, resulting in smoother AI training procedures.

Kuei: To put it differently, a long-term plan is essential. The process can be continuously updated, allowing for incremental implementation. Some updates may be irreversible, and irrelevant old data can be eliminated. It resembles the process of training an employee, which is not a short-term endeavor. Training AI follows a similar trajectory. When introducing AI, it is preferable to view it as a long-term commitment that does not require extensive upfront costs for the entire factory. Instead, starting with a single production line allows for gradual implementation and assessment of its efficiency. Over time, AI will continually improve. As it becomes established on the production line, the frequency of updates will decrease. Initially, updates may occur weekly, but as AI accumulates more knowledge, updates may transition to monthly or even quarterly intervals. In the future, updates may be necessary primarily when introducing new and diverse products.

The longer a factory is willing to commit to a long-term plan and invest both financial resources and effort into AI implementation, the greater the value it can derive from AI over time.

Kuei: AI surpasses the limitations of traditional computer vision because it can adapt to changes. However, before AI can effectively handle these changes, it needs to learn and familiarize itself with them. The reason why AI may not perform optimally is that it hasn’t encountered a specific situation before. Moreover, if AI is intentionally prevented from observing certain objects or events, it will be unable to react accordingly. Regardless of the sensor used, the target must be within the sensor’s range for detection to occur. This represents an apparent limitation and a common scenario. The goal is to deploy AI in an environment over an extended period so that it can continuously observe and learn, enabling it to be truly useful.

For instance, when people’s hands block the camera or obscure objects, AI cannot detect them. Therefore, if AI cannot see an object, it cannot provide assistance.

Kuei: For instance, when managers want to monitor the activities of workers, they observe closely. If there are obstructions that hinder their view, they instruct the workers to move their hands or objects away. However, AI lacks the capability to perform such actions independently, so human assistance is necessary. By assisting AI in these tasks, it can subsequently assist managers in making informed judgments.

Therefore, it is essential to provide AI with an optimal working environment. It is common for people to wonder why humans can perform certain tasks while AI struggles with them. However, we often overlook the fact that humans can employ various methods, such as verbally asking workers to move their hands away, to overcome such obstacles.

Kuei: AI can’t do it, so you have to help it. You can set up a good environment for it.

I am intrigued to hear your thoughts on the future development of AI vision in the manufacturing industry. What are your expectations for its progress and potential advancements?

Kuei: Currently, I am still exploring this issue and seeking a deeper understanding. There are already numerous applications of AI, particularly in terms of enhancing production efficiency and quality in factories. Many people are utilizing AI for tasks such as AOI (Automated Optical Inspection) or error detection. These applications largely align with traditional computer vision uses in the factory domain. Previously, these applications were not as effective or faced significant limitations. However, AI has strengthened their capabilities, making them practically applicable. Nevertheless, we are currently limited to this stage.

Looking towards the future, it is crucial for everyone to contemplate the implications. With an increasing amount of controllable data and an overarching view of the entire factory, we need to consider what judgments to make and what actions to take. Sometimes, the minute details we obsess over may not be the crucial 80% of the problem that deserves our focus. For instance, if we observe that 30% of employees consistently gather in a particular area, it might indicate an issue with the production line design that attracts people to that spot. Similarly, if we consistently notice product accumulation at specific locations, it could indicate a bottleneck in the process. We don’t need to scrutinize every single detail. Through quantity statistics and tracking specific items, we can gain insights into the flow of the production line.
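The idea of spotting a bottleneck from quantity statistics rather than from individual details can be sketched as follows. This is a minimal illustration, assuming a detector already reports how many items it sees in each zone per sampled frame; the zone names, the counts, and the `ratio` threshold are all made up for the example.

```python
from statistics import mean


def accumulation_zones(counts_by_zone, ratio=2.0):
    """Flag zones whose mean item count is at least `ratio` times the
    average across all zones (an arbitrary, illustrative rule)."""
    zone_means = {
        zone: mean(counts) for zone, counts in counts_by_zone.items()
    }
    overall = mean(list(zone_means.values()))
    if overall == 0:
        return []
    return sorted(
        zone for zone, m in zone_means.items() if m >= ratio * overall
    )


# Hypothetical per-frame item counts for three zones on one line.
counts = {
    "station_A": [2, 3, 2],
    "station_B": [10, 12, 14],
    "station_C": [1, 2, 3],
}
flagged = accumulation_zones(counts)
print(flagged)
```

The output is a short list of places to look at, which is the kind of summary a manager can act on without reviewing every recording.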

One challenge we face is recording 1,000 abnormalities per day but lacking the time to thoroughly address each one. It prompts the question of whether it is necessary for the factory to examine every single abnormality or if we can devise a plan through extensive analysis. This situation highlights the impact of knowledge from different fields. Frequently, factories are accustomed to utilizing simple methods and handling small datasets. When confronted with significant amounts of data, they struggle to determine the most effective approach. Therefore, we must engage in conversations about how to best utilize these resources. It becomes a creative process.

In the past, when such knowledge could not be readily applied, we did not create these management tools. In the future, collaboration becomes the key to overcoming challenges and making progress.

We have talked about the future development of AI vision and what it can do. Does an IE need to worry about being replaced by AI vision? How can factory managers anticipate the ways in which AI vision can contribute to achieving smart manufacturing goals?

Kuei: According to Mr. 陳維超, when he joined Inventec, his initial focus was on communicating with factories who expressed concerns about being replaced. In my view, active AI implementation may not be feasible within the next 5-10 years. Let’s consider self-driving cars as an example. Currently, self-driving technology is predominantly at level 2, with plans for level 3 and higher but no immediate implementation in sight. It appears that AI will serve as a crucial productivity tool, particularly as an auxiliary component, over the next 5-10 years. It has the potential to be a valuable assistant for IE.

With the introduction of AI vision to factories, the number of “eyes” available to observe things multiplies significantly. While you still have a single brain, you need to strategize how to effectively utilize these additional perspectives. If it proves helpful to you, we can explore how AI can assist in observing the specific elements you require. Auxiliary AI aims to save your time by locating relevant information, while final judgment is left to a professional IE.

In the past, when identifying issues on a production line, you had to invest time in calculations for a mere suspicion. However, in the future, data recording and AI analysis can quickly pinpoint key areas of concern. You may be able to accomplish this simply by sitting in front of a computer. The more we train AI, the better understanding we gain, ultimately saving us valuable time.

The crucial aspect of auxiliary AI lies in understanding user needs. Users must effectively communicate their requirements to developers, as this is a vital part of the development process. Naturally, our goal is to continuously enhance AI capabilities and strive for improvement. This is the primary objective of our work. Reflecting on the mention of annotation and AI model training, these elements play an important role in the process.

I frequently come across discussions emphasizing the importance of accuracy in AI models. How significant is accuracy for both factory managers and data scientists? Does a higher level of accuracy always indicate a better model?

Kuei: Accuracy can be viewed at two levels. The first level pertains to technical terms used by AI developers, such as accuracy, precision, and recall, which determine the model’s overall accuracy. However, these technical measures do not necessarily reflect the actual application of the model. In practical terms, when we engage in general object detection tasks, we focus on analyzing images frame by frame. If we can process 30 frames per second and miss half of them, we still have 15 frames remaining. Therefore, we design our product based on the accuracy of the model. Even if the accuracy is not exceptionally high, it can still be sufficient for the application at hand.
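For readers unfamiliar with the three technical terms mentioned above, here is a short sketch using their standard definitions. The counts are invented for illustration; they are chosen to show that a model can score high on accuracy while still missing half of the real events.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, and recall from confusion-matrix counts.

    tp/fp/fn/tn = true positives, false positives, false negatives,
    true negatives.
    """
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Invented example: 100 frames, 16 of which truly contain a defect.
metrics = classification_metrics(tp=8, fp=2, fn=8, tn=82)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

Which of the three numbers matters most depends on the application: a missed defect and a false alarm rarely cost the same.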

This accuracy assessment is intertwined with logical considerations beyond just the AI component. The design process involves formulating upper-level logic that aligns with the environment and the specific objectives of what needs to be observed. The accuracy of the final product is determined by its relevance to the desired outcome. Consequently, the accuracy of the application itself carries greater significance than the accuracy of the model alone.
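The gap between model accuracy and application accuracy can be made concrete with a small calculation based on the 30 frames per second example. The sketch below assumes each frame is detected independently with the same probability, which is a simplification (consecutive video frames are correlated, so real gains are smaller); the function name and the `min_hits` rule are illustrative, not a description of any actual product logic.

```python
from math import comb


def event_detection_rate(frame_recall, frames, min_hits=1):
    """Probability of at least `min_hits` detections across `frames` frames.

    Assumes every frame is detected independently with probability
    `frame_recall` (a simplifying assumption).
    """
    return sum(
        comb(frames, k)
        * frame_recall**k
        * (1 - frame_recall) ** (frames - k)
        for k in range(min_hits, frames + 1)
    )


# A model that misses half of all frames, observing an event for one second
# at 30 frames per second.
one_hit = event_detection_rate(0.5, 30)
ten_hits = event_detection_rate(0.5, 30, min_hits=10)
print(f"at least 1 of 30 frames:  {one_hit:.6f}")
print(f"at least 10 of 30 frames: {ten_hits:.4f}")
```

Requiring several hits instead of one is a typical piece of upper-level logic: it trades a little sensitivity for protection against single-frame false alarms.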

In the end, we still need to know where the problems are. Does the model actually help to solve the problem? Accuracy by itself is not that important, so we should not judge an AI model solely by its accuracy.

Kuei: Accuracy should not be solely defined by a single model, especially as it is also related to performance. The more accurate you want a model to be, the larger it becomes, leading to diminishing returns. For example, a smaller model may achieve 90% accuracy, but to reach 99%, you might need a model that is thousands or even tens of thousands of times larger. This comes with significantly higher computational costs, which may exceed budget constraints and training capabilities. In reality, you may not necessarily need such high accuracy. When you reach 90%, there are still many tasks that can be accomplished. Through other design considerations such as UI/UX and software design, you can achieve what you desire even with an 80% to 90% accurate model.

I am very grateful to Kuei for his time today. He explained and clarified things for us in a very approachable way. By comparing AI vision and AI models to 3-year-old children, he helped us understand that they need time to train and must be fed information on how to identify objects on the production line. However, the challenge we currently face is still related to hardware equipment and budget considerations, which are often overlooked but are crucial for achieving smart factories and digital transformation. These challenges are something that everyone will encounter.
